I’m currently in the throes of assembling data to use in forecasts on various forms of political change in countries worldwide for 2014. This labor-intensive process is the not-so-sexy side of “data science” that practitioners like to bang on about if you ask us, but I’m not going to do that here. Instead, I’m going to talk about how hard it is to find data sets that applied forecasters of rare events in international politics can even use in the first place. The steep data demands for predictive models mean that many of the things we’d like to include in our models get left out, and many of the data sets political scientists know and like aren’t useful to applied forecasters.

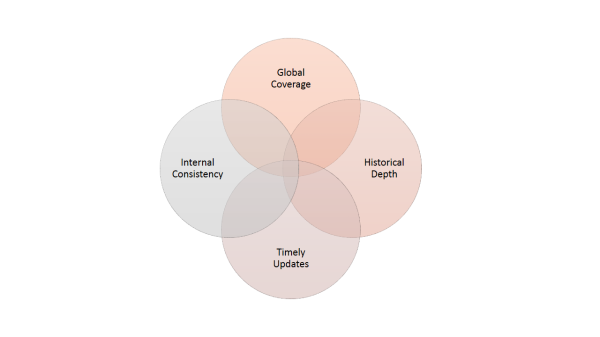

To see what I’m talking about, let’s assume we’re building a statistical model to forecast some rare event Y in countries worldwide, and we have reason to believe that some variable X should help us predict that Y. If we’re going to include X in our model, we’ll need data, but any old data won’t do. For a measure of X to be useful to an applied forecaster, it has to satisfy a few requirements. The Venn diagram above summarizes the four I run into most often.

First, that measure of X has to be internally consistent. Validity is much less of a concern than it is in hypothesis-testing research, since we’re usually not trying to make causal inferences or otherwise build theory. If our measure of X bounces around arbitrarily, though, it’s not going to provide much of a predictive signal, no matter how important the concept underlying X may be. Similarly, if the process by which that measure of X is generated keeps changing (say, national statistical agencies make idiosyncratic revisions to their accounting procedures, or coders keep changing their coding rules), then models based on the earlier versions will quickly break. If we know the source or shape of the variation, we might be able to adjust for it, but we aren’t always so lucky.

Second, to be useful in global forecasting, a data set has to offer global coverage, or something close to it. It’s really as simple as that. In the most commonly used statistical models, if a case is missing data on one or more of the inputs, it will be missing from the outputs, too. This is called listwise deletion, and it means we’ll get no forecast for cases that are missing values on any one of the predictor variables. Some machine-learning techniques can generate estimates in the face of missing data, and there are ways to work around listwise deletion in regression models, too (e.g., create categorical versions of continuous variables and treat missing values as another category). But those workarounds aren’t alchemy, and less information means less accurate forecasts.
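To make the mechanics concrete, here is a minimal sketch in plain Python of listwise deletion and of the "missing as its own category" workaround mentioned above. The countries, variable names, values, and cutpoints are all made up for illustration; they don't come from any real data set or from any model described in this post.

```python
# Hypothetical toy data: one row per country; None marks a missing value.
rows = [
    {"country": "A", "gdp_pc": 1200.0, "infant_mort": 60.0},
    {"country": "B", "gdp_pc": None, "infant_mort": 85.0},
    {"country": "C", "gdp_pc": 30000.0, "infant_mort": 4.0},
    {"country": "D", "gdp_pc": 800.0, "infant_mort": None},
]
PREDICTORS = ["gdp_pc", "infant_mort"]


def listwise_delete(rows, predictors):
    """Keep only the rows with an observed value on every predictor."""
    return [r for r in rows if all(r[p] is not None for p in predictors)]


def to_category(value, cutpoints):
    """Bin a continuous value; a missing value gets its own category."""
    if value is None:
        return "missing"
    for i, cut in enumerate(cutpoints):
        if value < cut:
            return f"bin{i}"
    return f"bin{len(cutpoints)}"


# Listwise deletion: countries B and D drop out, so they get no forecast.
complete = listwise_delete(rows, PREDICTORS)
dropped = [r["country"] for r in rows if r not in complete]

# Workaround: recode each predictor into categories (arbitrary cutpoints),
# so every country keeps a usable, if coarser, value on every input.
recoded = [
    {
        "country": r["country"],
        "gdp_cat": to_category(r["gdp_pc"], [1000.0, 10000.0]),
        "mort_cat": to_category(r["infant_mort"], [10.0, 50.0]),
    }
    for r in rows
]
```

With the recoding, all four countries stay in the estimation sample, but the model now sees only coarse bins plus a "missing" flag, which is the information loss described above.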

Worse, the holes in our global data sets usually form a pattern, and that pattern is often correlated with the very things we’re trying to predict. For example, the poorest countries in the world are more likely to experience coups, but they are also more likely not to be able to afford the kind of bureaucracy that can produce high-quality economic statistics. Authoritarian regimes with frustrated citizens may be more likely to experience popular uprisings, but many autocrats won’t let survey research firms ask their citizens politically sensitive questions, and many citizens in those regimes would be reluctant to answer those questions candidly anyway. The fact that our data aren’t missing at random compounds the problem, leaving us without estimates for some cases and screwing up our estimates for the rest. Under these circumstances, it’s often best to omit the offending data set from our modeling process entirely, even if the X it’s measuring seems important.
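One rough way to check for this kind of pattern is to compare how often the event occurs among cases where X is missing versus cases where it is observed. The sketch below does that in plain Python on invented data; the function name, the field names, and the numbers are hypothetical, and a gap between the two rates is only a warning sign, not a formal test.

```python
def event_rate_by_missingness(rows, predictor, outcome="event"):
    """Return the share of cases with the event, split by whether
    `predictor` is missing (None) or observed."""
    groups = {"observed": [], "missing": []}
    for r in rows:
        key = "missing" if r[predictor] is None else "observed"
        groups[key].append(r[outcome])
    return {k: (sum(v) / len(v) if v else None) for k, v in groups.items()}


# Invented country-years: event is 1 if the rare event occurred, else 0.
country_years = [
    {"gdp_pc": 900.0, "event": 0},
    {"gdp_pc": None, "event": 1},
    {"gdp_pc": 25000.0, "event": 0},
    {"gdp_pc": None, "event": 1},
    {"gdp_pc": 4000.0, "event": 0},
    {"gdp_pc": None, "event": 0},
    {"gdp_pc": 1500.0, "event": 1},
    {"gdp_pc": 12000.0, "event": 0},
]

rates = event_rate_by_missingness(country_years, "gdp_pc")
```

In this made-up example the event is much more common where the predictor is missing, which is exactly the situation in which dropping or naively filling those cases would distort the estimates for everything else.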

Third, and related to the second point, if our events are rare, then our measure of X needs historical depth, too. To estimate the forecasting model, we want as rich a library of examples as we can get. For events as rare as onsets of violent rebellion or episodes of mass killing, which typically occur in just one or a few countries worldwide each year, we’ll usually need at least a few decades’ worth of data to start getting decent estimates of the things that differentiate the situations where the event occurs from the many others where it doesn’t. Without that historical depth, we run into the same missing-data problems I described in relation to global coverage.

I think this criterion is much tougher to satisfy than many people realize. In the past 10 or 20 years, statistical agencies, academic researchers, and non-governmental organizations have begun producing new or better data sets on all kinds of things that went unmeasured or poorly measured in the past—things like corruption or inflation or unemployment, to name a few that often come up in conversations about what predicts political instability and change. Those new data sets are great for expanding our view of the present, and they will be a boon to researchers of the future. Unfortunately, though, they can’t magically reconstruct the unobserved past, so they still aren’t very useful for predictive models of rare political events.

The fourth and final circle in that Venn diagram may be both the most important and the least appreciated by people who haven’t tried to produce statistical forecasts in real time: we need timely updates. If I can’t depend on the delivery of fresh data on X before or early in my forecasting window, then I can’t update my forecasts while they’re still relevant, and the model is effectively DOA. If X changes slowly, we can usually get away with using the last available observation until the newer stuff shows up. Population size and GDP per capita are a couple of variables for which this kind of extrapolation is generally fine. Likewise, if the variable changes predictably, we might use forecasts of X before the observed values become available. I sometimes do this with GDP growth rates. Observed data for one year aren’t available for many countries until deep into the next year, but the IMF produces decent forecasts of recent and future growth rates that can be used in the interim.
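The fallback logic described above can be sketched as a small helper: use the observed value if it exists, otherwise an interim forecast of X if one is available, otherwise the last available observation. This is an illustration in plain Python, not the code behind any actual forecasts; the function name and all the numbers are made up.

```python
def value_for_forecast(observed, year, interim_forecasts=None):
    """Pick an input value for `year` and report where it came from.

    Preference order: observed value, then an interim forecast of X,
    then the most recent earlier observation carried forward.
    """
    if observed.get(year) is not None:
        return observed[year], "observed"
    if interim_forecasts and interim_forecasts.get(year) is not None:
        return interim_forecasts[year], "interim forecast"
    earlier = [y for y, v in observed.items() if y < year and v is not None]
    if earlier:
        last = max(earlier)
        return observed[last], f"carried forward from {last}"
    return None, "missing"


# Made-up numbers for one hypothetical country.
gdp_per_capita = {2010: 1450.0, 2011: 1510.0, 2012: 1580.0}  # slow-moving
gdp_growth = {2011: 4.1, 2012: 4.6}                          # observed, in %
growth_projection = {2013: 3.9}                              # hypothetical interim forecast

slow = value_for_forecast(gdp_per_capita, 2013)
fast = value_for_forecast(gdp_growth, 2013, growth_projection)
```

Returning the source alongside the value makes it easy to see how many of a model's inputs in a given year are stand-ins rather than fresh observations.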

Maddeningly, though, this criterion alone renders many of the data sets scholars have painstakingly constructed for specific research projects useless for predictive modeling. For example, scholars in recent years have created numerous data sets to characterize countries’ national political regimes, a feature that scores of studies have associated with variation in the risk of many forms of political instability and change. Many of these “boutique” data sets on political regimes are based on careful research and coding procedures, cover the whole world, and reach at least several decades or more into the past. Only two of them, though—Polity IV and Freedom House’s Freedom in the World—are routinely updated. As much as I’d like to use unified democracy scores or measures of authoritarian regime type in my models, I can’t without painting myself into a forecasting corner, so I don’t.

As I hope this post has made clear, the set formed by the intersection of these four criteria is a tight little space. The practical requirements of applied forecasting mean that we have to leave out of our models many things that we believe might be useful predictors, no matter how important the relevant concepts might seem. They also mean that our predictive models on many different topics are often built from the same few dozen “usual suspects”—not because we want to, but because we don’t have much choice. Multiple imputation and certain machine-learning techniques can mitigate some of these problems, but they hardly eliminate them, and the missing information affects our forecasts either way. So the next time you’re reading about a global predictive model on international politics and wondering why it doesn’t include something “obvious” like unemployment or income inequality or survey results, know that these steep data requirements are probably the reason.
